What audio encoding of spatial information (for a whole room/scenario) best enables someone to be spatially aware of their surroundings?

Spring 2024 - Now

Active Contributors: Professor Philip S. Thomas, Professor Ravi Karkar, Professor VP Nguyen, Tung Le, Paul Davis, Ryan Boldi

Codebase contribution: Wrote 2,600+ lines of C# code across 32 files

Research Objective

This study investigates the optimal audio encoding methods for spatial information to enhance environmental awareness through sound. The research specifically focuses on developing and evaluating different approaches to convey room-scale spatial information through audio cues, enabling users to understand their surroundings without visual input.

Experimental Setup

The study utilizes wired headphones in a controlled indoor environment to ensure consistent motion controller tracking and audio quality. The testing environment consists of randomly selected preset rooms within a 12x12 foot boundary, incorporating a safety guard offset of 1-2 feet. This setup enables controlled testing while maintaining ecological validity.
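The boundary arithmetic can be made concrete with a short sketch. It is written in Python for illustration and is not taken from the project's C# code. It assumes the guard offset is applied inward on every side of the square boundary and that positions are measured in feet from the center of the room; the function names and the 1.5 ft default are hypothetical.

```python
def usable_extent(boundary_ft: float = 12.0, guard_ft: float = 1.5) -> float:
    """Side length (feet) of the square left after insetting the guard on every side.

    Assumption: the guard offset shrinks the boundary symmetrically.
    """
    side = boundary_ft - 2.0 * guard_ft
    if side <= 0:
        raise ValueError("guard offset leaves no usable area")
    return side


def inside_usable_area(x_ft: float, y_ft: float,
                       boundary_ft: float = 12.0, guard_ft: float = 1.5) -> bool:
    """True if a point (measured from the room center) lies inside the inset square."""
    half = usable_extent(boundary_ft, guard_ft) / 2.0
    return abs(x_ft) <= half and abs(y_ft) <= half
```

Under these assumptions, a 1 ft guard leaves a 10 x 10 ft area and a 2 ft guard leaves an 8 x 8 ft area.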

Methodology

The research employs a comprehensive testing protocol divided into pre-test qualification and main test phases. The pre-test phase evaluates subjects' basic spatial audio perception abilities through various tasks, including pointing to static and moving audio sources in both 2D and 3D space. The main test phase involves three distinct scenarios: Stationary, Line, and Studio, where subjects navigate virtual environments and interact with objects using different audio encoding methods.

Audio Encoding Methods

Two primary encoding approaches were developed, each with three distinct variations:

1) Growing Sphere Method: Features time-based distance indication with three variations: basic timing, pitch-distance mapping, and pitch-elevation mapping.

2) Environmental Sound Method: Implements three different approaches to wall and object sonification: sequential sound emission, constant sound emission, and on-demand sound emission (echolocation-style).
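One plausible reading of the basic-timing variation of the Growing Sphere Method is that a sphere expands outward from the listener at a constant speed and each object emits its cue when the sphere surface reaches it, so the delay encodes distance. The Python sketch below illustrates that reading only. The growth speed, the function names, and the example objects are assumptions, and the project's actual C# implementation may differ.

```python
import math


def sphere_arrival_delay(listener, obj, growth_speed=2.0):
    """Seconds until a sphere growing from `listener` at `growth_speed` (m/s) reaches `obj`.

    `listener` and `obj` are (x, y, z) positions in meters.
    """
    if growth_speed <= 0:
        raise ValueError("growth_speed must be positive")
    return math.dist(listener, obj) / growth_speed


def schedule_cues(listener, objects, growth_speed=2.0):
    """Return (delay_seconds, name) pairs ordered by when the sphere reaches each object."""
    return sorted(
        (sphere_arrival_delay(listener, position, growth_speed), name)
        for name, position in objects.items()
    )


if __name__ == "__main__":
    # Hypothetical scene: nearer objects are heard earlier.
    scene = {"wall": (0.0, 0.0, 3.0), "chair": (1.0, 0.0, 0.0)}
    for delay, name in schedule_cues((0.0, 0.0, 0.0), scene):
        print(f"{name}: {delay:.2f} s")
```

A slower growth speed stretches the delays and makes small distance differences easier to hear, at the cost of a longer scan of the room.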

Spatial-Audio Mapping

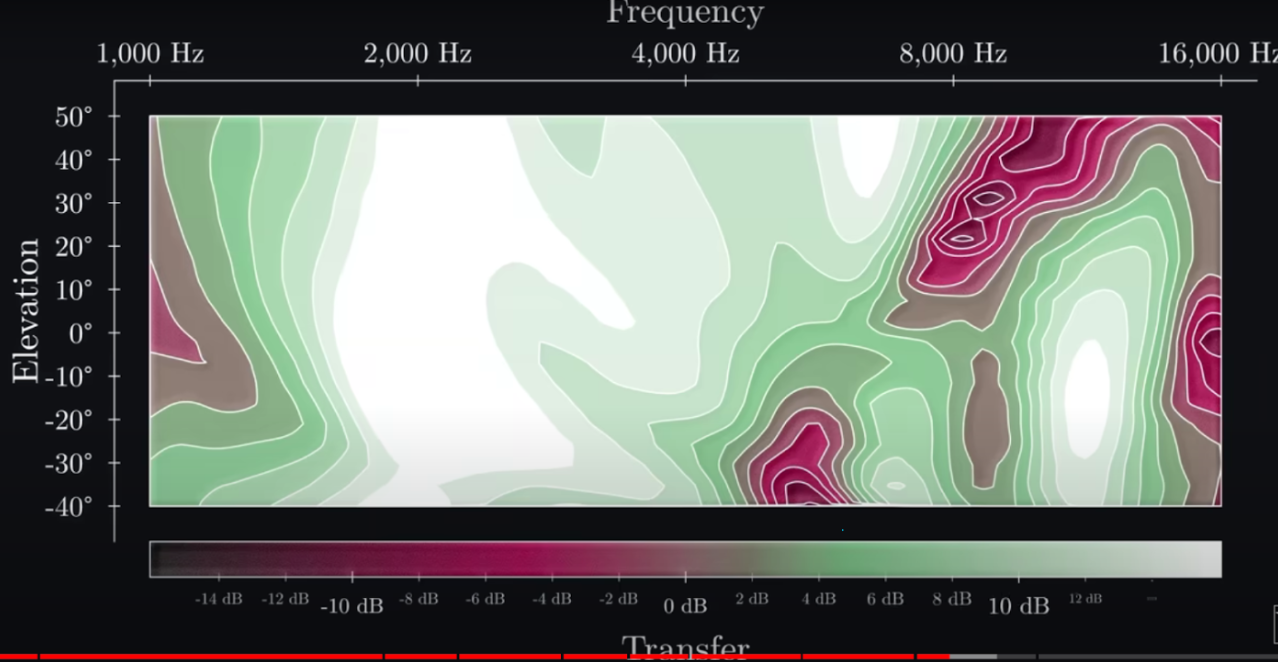

The study explores various mappings between spatial properties (distance, elevation) and sound properties (pitch, delay, amplitude). Multiple mapping configurations are investigated, including direct one-to-one relationships and more complex transformations. For example, specific implementations include mapping elevation to frequency (6000 Hz for high elevation with +10 dB amplitude, adjusted accordingly for lower elevations) and various distance-to-sound parameter relationships.
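As an illustration of an elevation-to-frequency mapping, the Python sketch below interpolates between a low and a high endpoint. Only the high endpoint (6000 Hz at +10 dB) comes from the text. The low endpoint (500 Hz at 0 dB), the elevation range, the log-frequency interpolation, and all names are assumptions chosen for the example, not the study's actual parameters.

```python
HIGH_FREQ_HZ = 6000.0  # from the text: frequency used for high elevation
HIGH_GAIN_DB = 10.0    # from the text: gain used for high elevation
LOW_FREQ_HZ = 500.0    # assumption: frequency for the lowest elevation
LOW_GAIN_DB = 0.0      # assumption: gain for the lowest elevation


def elevation_to_cue(elevation_m, min_elev_m=0.0, max_elev_m=2.5):
    """Map an elevation in meters to (frequency_hz, gain_db).

    Frequency is interpolated on a log scale, so equal height steps give
    equal musical intervals; gain is interpolated linearly in dB.
    """
    t = (elevation_m - min_elev_m) / (max_elev_m - min_elev_m)
    t = min(1.0, max(0.0, t))  # clamp elevations outside the range
    freq_hz = LOW_FREQ_HZ * (HIGH_FREQ_HZ / LOW_FREQ_HZ) ** t
    gain_db = LOW_GAIN_DB + t * (HIGH_GAIN_DB - LOW_GAIN_DB)
    return freq_hz, gain_db


def db_to_amplitude(gain_db):
    """Convert a gain in dB to a linear amplitude multiplier."""
    return 10.0 ** (gain_db / 20.0)
```

For reference, +10 dB corresponds to a linear amplitude factor of roughly 3.16.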

Key Findings

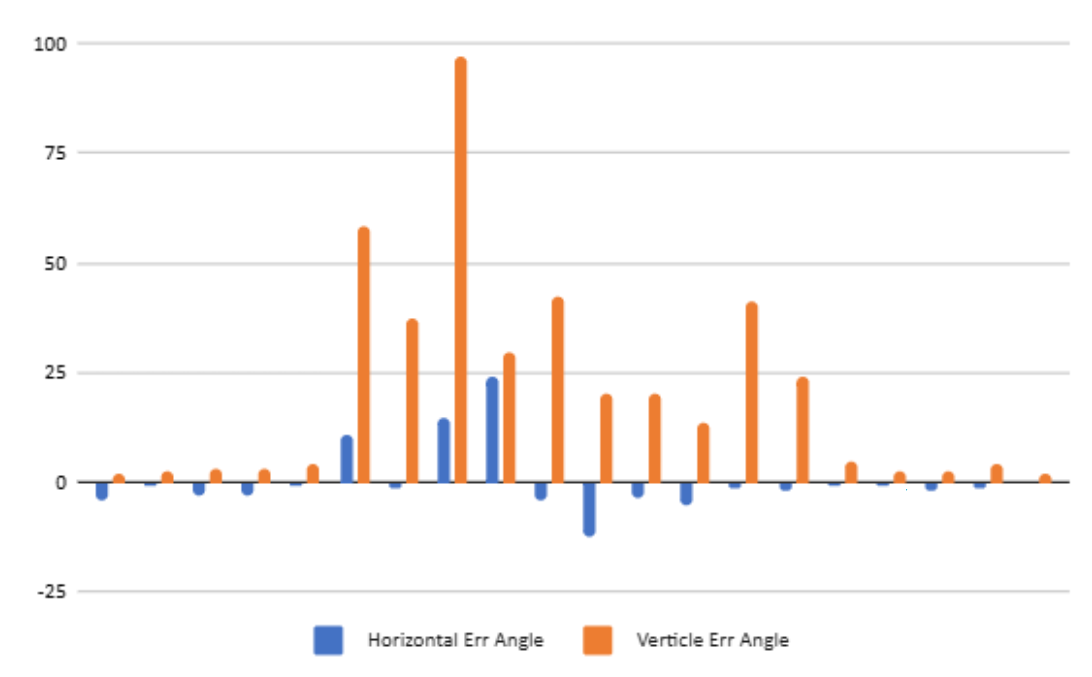

Preliminary results show that, in the stationary condition, subjects were approximately twice as accurate at determining horizontal position as vertical position when using Meta's native head-related transfer functions (HRTFs). This suggests a marked disparity in spatial audio perception between the horizontal and vertical planes.
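The text does not state how accuracy was scored. One common way to compare horizontal and vertical performance is to split each pointing error into an azimuth component and an elevation component, as in the hedged Python sketch below. The coordinate convention (y up, z forward) and the function names are assumptions, not the study's analysis code.

```python
import math


def to_azimuth_elevation(direction):
    """Convert an (x, y, z) direction to (azimuth_deg, elevation_deg).

    Assumed convention: y is up, z is forward, azimuth is positive to the right.
    """
    x, y, z = direction
    azimuth = math.degrees(math.atan2(x, z))
    elevation = math.degrees(math.atan2(y, math.hypot(x, z)))
    return azimuth, elevation


def pointing_errors(target, pointed):
    """Return absolute (azimuth_error_deg, elevation_error_deg) between two directions."""
    t_az, t_el = to_azimuth_elevation(target)
    p_az, p_el = to_azimuth_elevation(pointed)
    # Wrap the azimuth difference into [-180, 180) so 170 vs -170 counts as 20 degrees.
    az_diff = (p_az - t_az + 180.0) % 360.0 - 180.0
    return abs(az_diff), abs(p_el - t_el)
```

Averaging the two components separately over all trials gives one horizontal and one vertical error figure per subject, which is the kind of comparison the finding above describes.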

Impact and Applications

This ongoing research has potential applications in assistive technology, where intuitive auditory feedback could help visually impaired individuals navigate independently, and in safety systems that supplement a user's spatial awareness with 360-degree environmental perception.